New Perspective on Autonomous Microscopy in APL Machine Learning

We are delighted to announce the publication of our new perspective piece, "Mind the gap: Bridging the divide between AI aspirations and the reality of autonomous microscopy," now available in APL Machine Learning. This work reflects nearly a decade of dedicated research by our team and esteemed collaborators, offering insights into the dynamic and promising field of autonomous electron microscopy.

This paper is featured in the Special Topic on Machine Learning for Self-Driving Laboratories, and we appreciate the opportunity extended by Shijing Sun to contribute our perspective.

This work was spearheaded by Grace Guinan and Addie Salvador, exceptional DOE Science Undergraduate Laboratory Internship (SULI) and co-op interns. They led the overall structure and narrative, as well as the writing on atomic-resolution analysis and automation.

From Vision to Reality in AI Microscopy

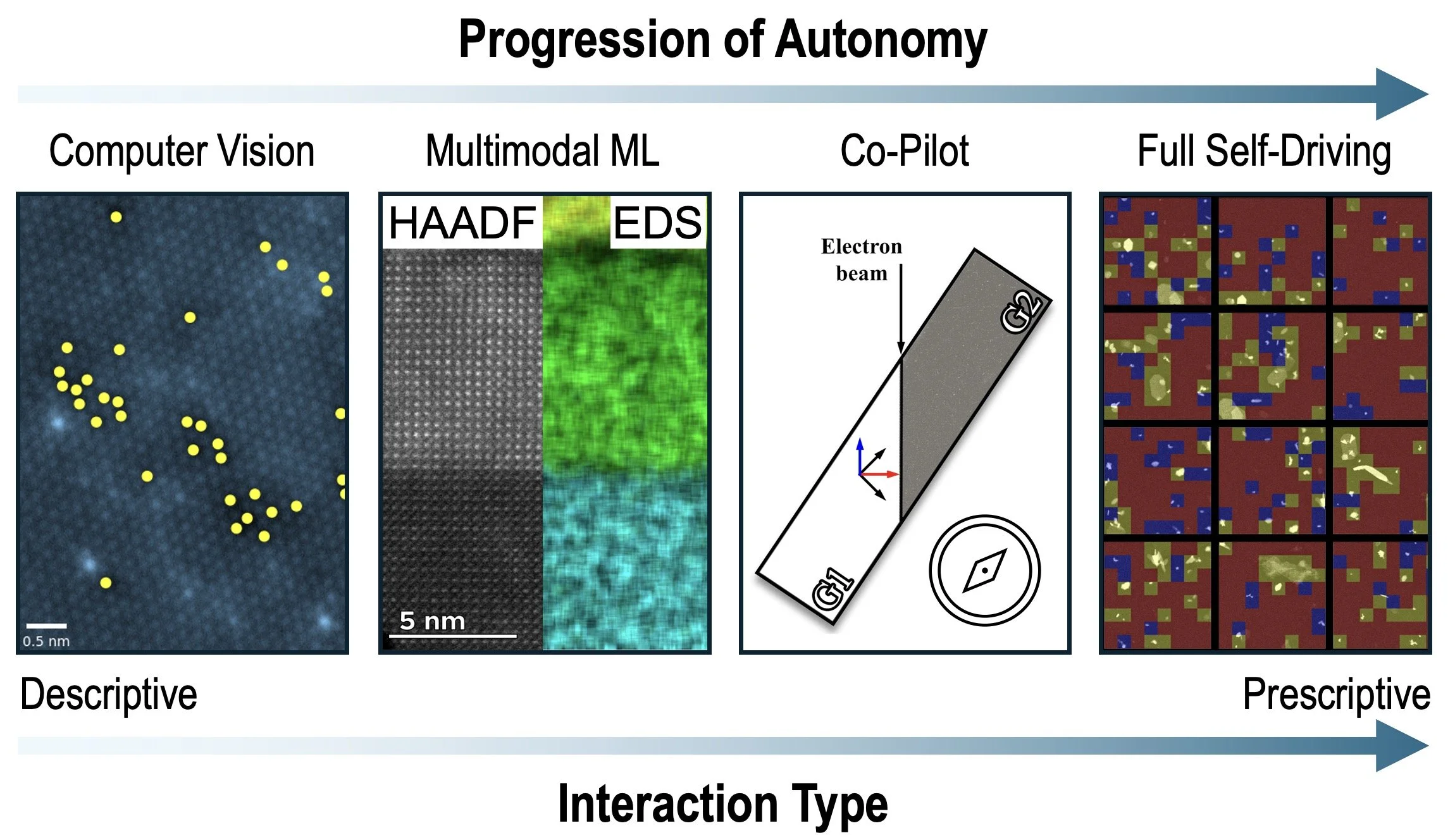

The integration of Artificial Intelligence (AI) holds powerful potential for materials science, especially for electron microscopy, which provides rich, multimodal datasets from the atomic scale upwards. However, translating AI's promise into everyday laboratory practice requires navigating a significant gap between theoretical possibilities and practical implementation. Our perspective aims to bridge this divide. We chart the course from descriptive to prescriptive autonomy, detailing key advancements while openly discussing the practical challenges and future directions needed for robust, real-world autonomous systems.

We highlight progress and key learnings across four primary areas:

Domain-Specific Computer Vision: Developing robust AI tools to statistically map atomic features, such as quantifying point defects in 2D MXene materials, enabling analysis at previously inaccessible scales.

Multimodal Models: Harnessing the complementary power of diverse data streams—like STEM-HAADF imaging and STEM-EDS spectroscopy—to build richer material descriptors and address complex characterization challenges, such as understanding disorder in irradiated oxides.

AI Co-Pilots: Creating intuitive tools like NanoCartographer to assist scientists in navigating the nanoworld, effectively translating high-level materials science queries into low-level instrument control, and promoting FAIR data practices.

Fully Autonomous Systems: Addressing the complexities of building "self-driving" microscopes, implementing ML-driven decision-making, and tackling the engineering hurdles—from software APIs to hardware limitations—to achieve closed-loop experimentation.

An Invitation and a Roadmap

We hope this perspective serves not only as a record of our journey but also as a roadmap for fellow researchers entering or advancing within this field. We've emphasized the practical "how-to" and the real-world obstacles, aiming to demystify autonomy and encourage broader community involvement in developing these transformative tools.

We are genuinely excited about the future of AI-driven characterization and its potential to accelerate materials discovery and design.

Our sincere thanks go to all co-authors (Grace Guinan, Addison Salvador, Michelle A. Smeaton, Andrew Glaws, Hilary Egan, Brian C. Wyatt, Babak Anasori, Kevin R. Fiedler, and Matthew J. Olszta) for their invaluable contributions. We also gratefully acknowledge the support of our funding agencies, particularly the Department of Energy through the NREL and PNNL Laboratory Directed Research and Development (LDRD) programs and the National Science Foundation (NSF).

We invite the materials science community to explore our perspective and join the conversation on shaping the future of autonomous experimentation!